Welcome to the Hardenize blog. This is where we will document our journey as we make the Internet a more secure place and have some fun and excitement along the way.

We're happy to announce the first release of public Hardenize APIs, which enable our customers to have direct control over their accounts. The main focus of today's release is to provide the essential API endpoints, as well as establish a strong foundation on top of which we will continue to build.

Ever since we first started working on Hardenize, we understood that having a good API is vitally important for our success. After all, what good is a lot of information if it's locked in a silo you can't get it out of? But some things can't be rushed; in particular, you can't have an API without having some functionality first. And so we had to wait, patiently, until the time was right.

This blog post is not about our APIs as such, because you can learn all about them in our documentation. Instead, we'll tell you about the path we took to evaluate the mainstream API approaches, our experiments, and how we arrived at our decisions.

We're big fans of REST, and we've had an internal REST-based API essentially since day one. However, when the time came to build our public APIs, we looked around at the various possibilities to see whether a better choice was available. We considered two other options.

The first was gRPC, a popular RPC framework developed at Google. Its advantages are that it is quite fast (it uses Protocol Buffers, or Protobuf, as its default serialization format) and that it generates skeleton client and server code. After an internal pilot, we ultimately decided that gRPC was not a good match for our use case. That said, we consider it a great choice for machine-to-machine communication in a relatively controlled environment, especially where performance is very important.

Compared to REST, gRPC requires the use of client libraries and has a steep learning curve. Another problem is that we wanted to consume our APIs from our own web applications (we wanted one set of APIs for everything), and gRPC doesn't have native browser support (even though there's been some slow progress in that direction). It is possible to expose gRPC services via a REST bridge, but only by using a third-party package rather than natively. At first, that seemed like a perfect solution: it would remove the learning curve for our customers and give us browser access. We added the bridge to our build, but it made our architecture unnecessarily complicated and potentially fragile, and overall it didn't feel right. Crucially, with this entire stack on our platform (Java), development felt slow and unpleasant, and iteration suffered. Fast iteration was the one thing we didn't want to compromise on.

We also wanted to give GraphQL a chance. Its basic premise is to replace a set of fixed API endpoints (as with REST) with a data model that clients access through a query language. This seems like a fantastic idea, because it addresses two issues with REST: 1) an endpoint returns all of its data even when you need only a small part of it (overfetching), and at the same time 2) you often need to submit multiple API requests to get all the data you need (underfetching). The first issue increases network bandwidth consumption and the second increases the effective API latency; both slow down the API user.
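To make the two problems concrete, here is a toy Java (16+) simulation that counts round trips and transferred fields. Everything in it is hypothetical: the host records, the field names, and the imagined `GET /hosts` and `GET /hosts/{name}` endpoints are invented for illustration and do not describe our API. It assumes a client that wants only the `grade` of every host. The REST-style path has to list the hosts and then fetch each full record, while the GraphQL-style path models a single query that selects just that one field.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Toy cost model for over- and underfetching; all data and endpoints are hypothetical. */
public class FetchingDemo {

    /** What a client ended up with, and what it cost to get it. */
    record Cost(int requests, int fieldsTransferred, List<String> grades) {}

    // Hypothetical server-side store: host name -> full record (four fields each).
    static final Map<String, Map<String, String>> HOSTS = new LinkedHashMap<>();

    static {
        HOSTS.put("example.com", Map.of(
                "name", "example.com", "grade", "A", "tlsVersion", "1.3", "certExpiry", "2030-01-01"));
        HOSTS.put("example.org", Map.of(
                "name", "example.org", "grade", "B", "tlsVersion", "1.2", "certExpiry", "2030-06-01"));
    }

    /** REST style: GET /hosts for the names, then GET /hosts/{name} for each one. */
    static Cost restStyle() {
        int requests = 1;              // the list request
        int fields = HOSTS.size();     // the list carries one field (the name) per host
        List<String> grades = new ArrayList<>();
        for (String name : HOSTS.keySet()) {
            Map<String, String> record = HOSTS.get(name);
            requests++;                // underfetching: one extra round trip per host
            fields += record.size();   // overfetching: the full record comes back
            grades.add(record.get("grade"));
        }
        return new Cost(requests, fields, grades);
    }

    /** GraphQL style: one query selecting only the grade field of every host. */
    static Cost graphQlStyle() {
        List<String> grades = new ArrayList<>();
        for (Map<String, String> record : HOSTS.values()) {
            grades.add(record.get("grade"));
        }
        return new Cost(1, grades.size(), grades);
    }

    public static void main(String[] args) {
        System.out.println("REST-style:    " + restStyle());
        System.out.println("GraphQL-style: " + graphQlStyle());
    }
}
```

With two hosts of four fields each, the REST-style path makes three requests and transfers ten fields, while the single query transfers two. The model ignores everything else (payload encoding, caching, server-side cost), so it only shows the shape of the trade-off.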

We spent less time with GraphQL than with gRPC, mostly because it was more difficult to get up and running. We found the documentation for the Java GraphQL server implementation lacking, and the most popular Java tutorial is out of date. Despite the frustration, we managed to complete our proof of concept (PoC), but we lost confidence in GraphQL in the process, at least when it comes to our needs and context.

There seem to be two main challenges with GraphQL. The first is that, to use it properly, you have to implement a separate schema and data model, which are going to be different from whatever data model you already have. I'm not saying that's necessarily a bad idea, but it's a lot of work, and there are not many good guides and examples out there. The second is that server-side data fetching is a black box. An engine processes client queries and fetches the necessary data, but it's not clear what the resulting performance is going to be. In fact, many articles about GraphQL mention performance problems in this area, such as the well-known N+1 query problem, where resolving a list of items triggers one additional backend query per item.

In the end, we abandoned GraphQL on the basis of our initial experience and the poor documentation. We still like the idea conceptually and may try it again in the future.

REST has a lot going for it, the main thing being that everybody understands it, even though everyone has a slightly different definition of it. If you understand HTTP, you understand REST, and there are plenty of tools you can start using in minutes. The barrier to entry is as low as it can be.
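As an illustration of how low that barrier is, the sketch below uses nothing but the JDK's built-in `java.net.http` client (Java 11+). The base URL, the `/hosts` path, and the use of HTTP Basic authentication are assumptions made for the example, not a description of our actual endpoints; consult the API documentation for the real paths and authentication scheme.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

/** Minimal REST call using only the JDK; the endpoint and auth scheme are placeholders. */
public class RestCallSketch {

    // Hypothetical base URL; not a real API endpoint.
    static final String BASE_URL = "https://api.example.com/v1";

    /** Builds a GET request for a hypothetical /hosts resource with HTTP Basic credentials. */
    static HttpRequest listHostsRequest(String user, String password) {
        String token = Base64.getEncoder()
                .encodeToString((user + ":" + password).getBytes(StandardCharsets.UTF_8));
        return HttpRequest.newBuilder()
                .uri(URI.create(BASE_URL + "/hosts"))
                .header("Accept", "application/json")
                .header("Authorization", "Basic " + token)
                .GET()
                .build();
    }

    /** Sends the request and returns the response body as a string (requires network access). */
    static String fetch(HttpRequest request) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        return response.body();
    }

    public static void main(String[] args) {
        HttpRequest request = listHostsRequest("api-user", "api-secret");
        // Print the request instead of sending it, since BASE_URL is a placeholder.
        System.out.println(request.method() + " " + request.uri());
    }
}
```

The same request is a one-liner with any general-purpose HTTP tool, which is the point: no generated stubs or special client libraries are needed to get started.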

Building a REST API was straightforward, although in some situations it can feel that, whatever design choices you make, you're always going to be slightly unhappy. Mike said it best: whatever you do, it somehow always feels like you're doing it incorrectly.

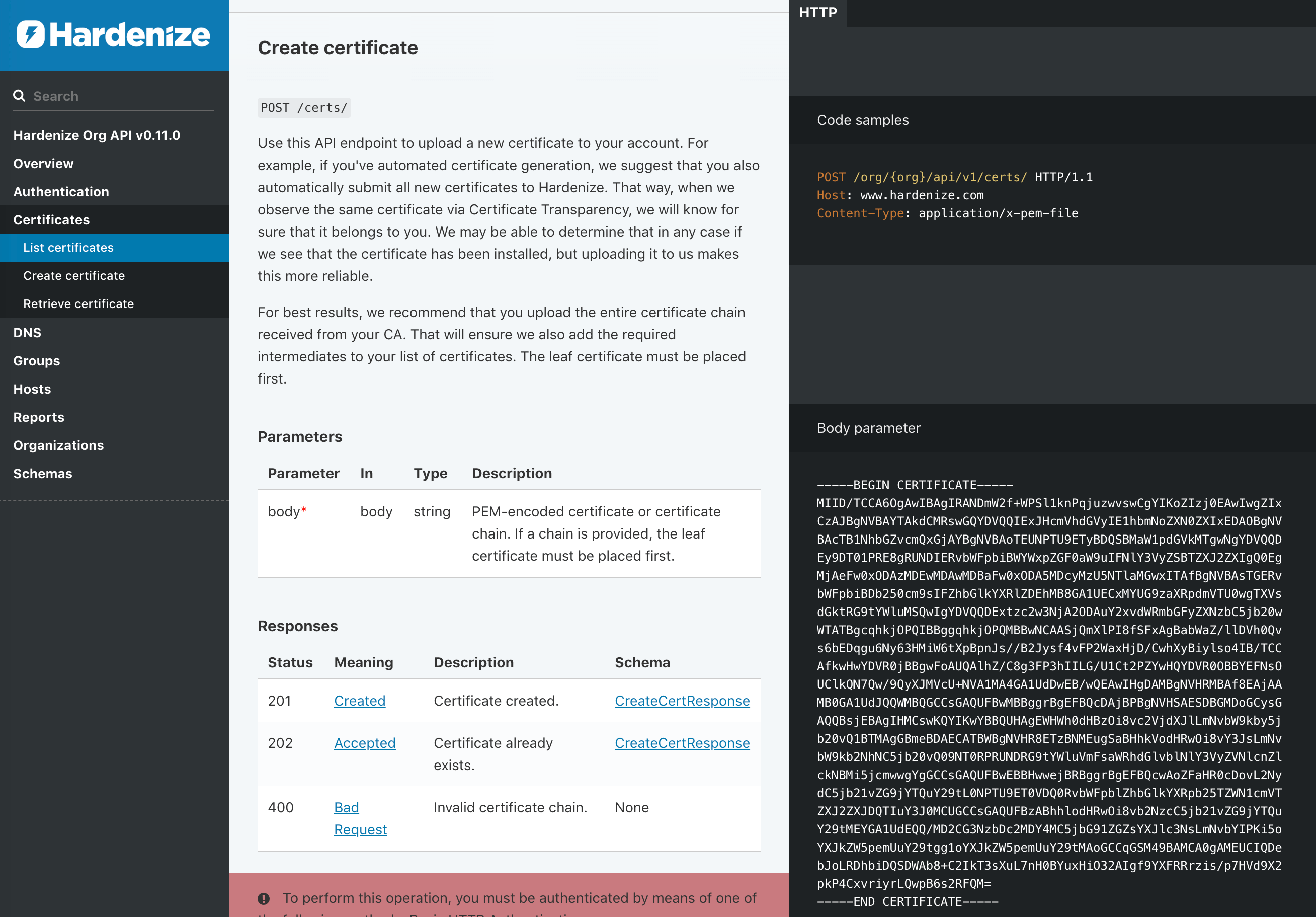

Another great thing about REST is that you can describe your API using the OpenAPI Specification, which allows documentation to be generated automatically. Doing this felt great, even though we wrote the specification by hand. It's a delight to have great documentation. OpenAPI gives you a structure in which to work, effectively guiding you until you've documented everything properly. OpenAPI also opens the door to automated client library generation, although we opted to write our own library by hand.

This being only our first release, our API is not yet complete; we'll be adding the remaining functionality in the coming weeks. After that, we'll be taking an API-first approach to developing our product. The main thing now is that we know how we're going to do it, which will make everything much faster. Stay tuned.